The Scaffolded Sound Beehive is an immersive installation in the form of a beehive. The viewer puts his or her head inside and experiences a visual and auditory artistic interpretation of hive activity.

The Scaffolded Sound Beehive is part of a series of ecological instrumented beehives leading towards a fully biocompatible intelligent beehive. The Scaffolded Sound Beehive allows the artist and the members of the Brussels Urban Bee Laboratory to study the tight interaction between city honeybees and urban ecosystems, using artistic research practices and in collaboration with scientists.

The human-size beehive is constructed using open source digital fabrication (fablab) and mounted on scaffolds. The hive is 2.5 m high so that visitors can put their head inside it and experience an auditory artistic interpretation of hive activity, making this an interactive immersive installation.

By introducing microphones inside the real beehive in our field laboratory, we have been developing a monitoring device that is based upon the continuous scanning of the bee colony’s buzz. The sound behaviour of the bee colony was recorded for a complete season. We then processed the original recordings with sound software.

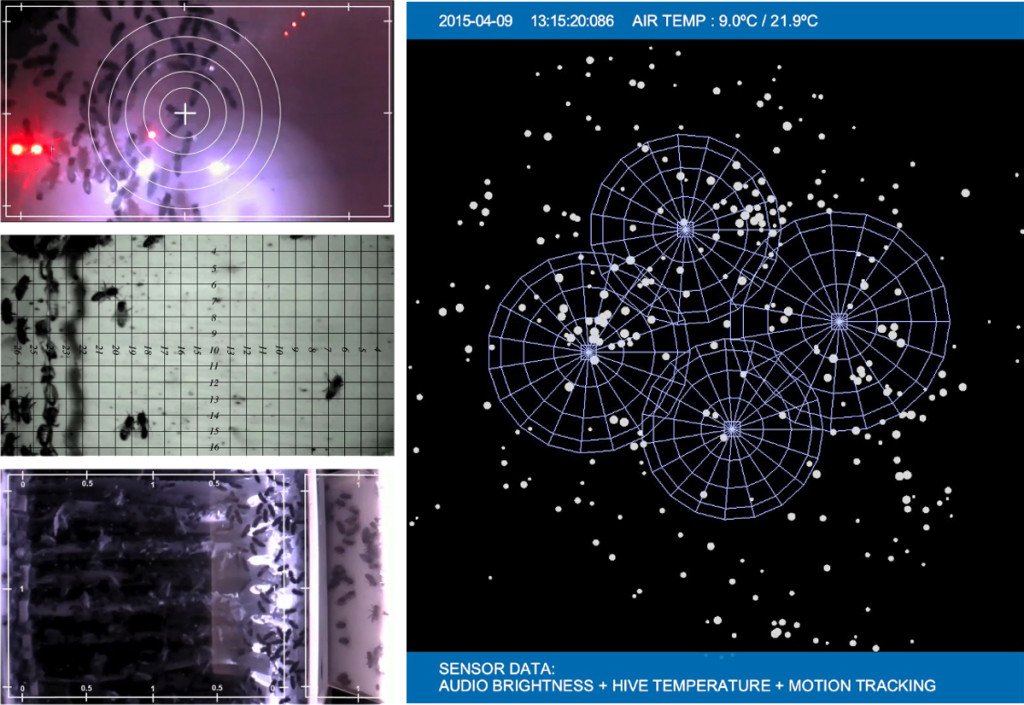

We have also been adding infrared cameras and video monitoring, which give us a full spectrum of possibilities for observing the behaviour of the bee colony and conducting environmental surveillance.

The Scaffolded Sound Beehive is an exhibition platform on which the eco-data gathered during one bee season are made public. It is a prototype for slow art sonification and visualization. The 8-channel sound installation inside the sculpture consists of elaborations of the field recordings made in the brood nest of the real beehive.

AnneMarie Maes worked with the sound artists Givan Bela and Bill Bultheel on different renderings of the field recordings.

The video screens in the Scaffolded Sound Beehive show how AI techniques can be used to enrich our experience of the natural world by augmenting sounds and images with artificially generated structures, and by visualizing the deeper categories that machine learning algorithms can detect in sensor data.

Visualization of the audio data (audio loudness) in combination with the hive temperature and the motion tracking of the hive activity on the landing platform.

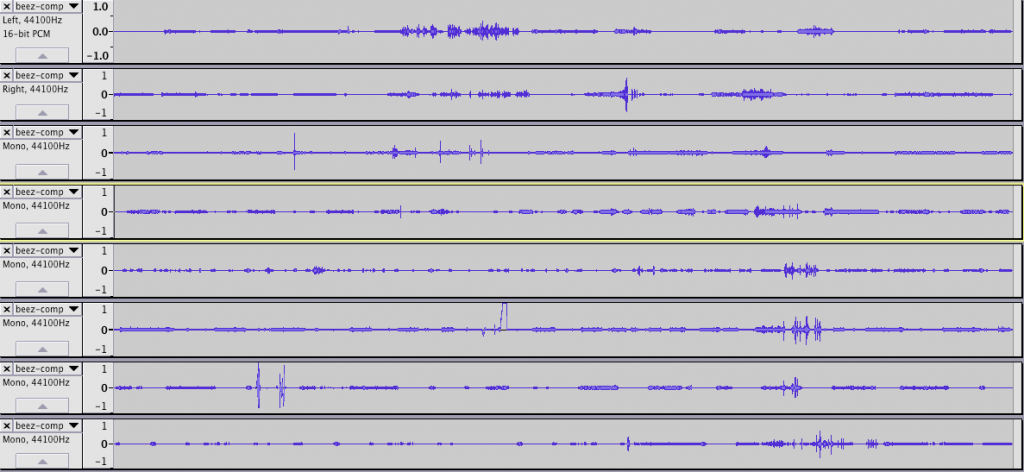
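As an illustration of how an audio loudness curve could be derived from a field recording and lined up with hive temperature readings, here is a minimal Python sketch. It is not the pipeline used for the installation: the function names, the windowed RMS measure, the `-120.0` dB floor for silence and the `(timestamp, celsius)` log format are all assumptions made for the example.

```python
import bisect
import math


def rms_loudness_db(samples, window_size):
    """Return one loudness value (dB relative to full scale) per window.

    `samples` is a sequence of floats in [-1.0, 1.0]. A trailing partial
    window is ignored. Assumed measure: root-mean-square per window.
    """
    floor_db = -120.0  # assumed value reported for completely silent windows
    loudness = []
    for start in range(0, len(samples) - window_size + 1, window_size):
        window = samples[start:start + window_size]
        rms = math.sqrt(sum(x * x for x in window) / window_size)
        loudness.append(20.0 * math.log10(rms) if rms > 0 else floor_db)
    return loudness


def pair_with_temperature(loudness, window_seconds, temperature_log):
    """Attach to each loudness window the latest temperature reading.

    `temperature_log` is a hypothetical list of (timestamp_seconds, celsius)
    tuples sorted by time. Windows that start before the first reading
    get None as their temperature.
    """
    times = [t for t, _ in temperature_log]
    rows = []
    for i, db in enumerate(loudness):
        t = i * window_seconds
        j = bisect.bisect_right(times, t) - 1  # last reading at or before t
        temp = temperature_log[j][1] if j >= 0 else None
        rows.append((t, db, temp))
    return rows
```

Each resulting row holds a time offset, a loudness value and a temperature, which is the kind of combined series a plot of loudness against hive temperature could be drawn from. Motion tracking data from the landing platform could be joined in the same way, given timestamps.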

Analysis & synthesis by Bill Bultheel: Sound Bees

The beehive recordings span a full day (from midnight to midnight). As a compositional principle, different ideas around swarm formation were investigated.

The increase and decrease of swarm activity in the hive, and its influence on the hive's sound, became a guideline for the transformation of the recordings. Natural phenomena are thereby used as musical tools and, in retrospect, musical tools are used as an artistic rendition or analysis of natural phenomena. The audio work tries to embody the bee swarm while simultaneously intersecting the swarm with swirling electronic sound clusters.

Audacity 8-track screenshot

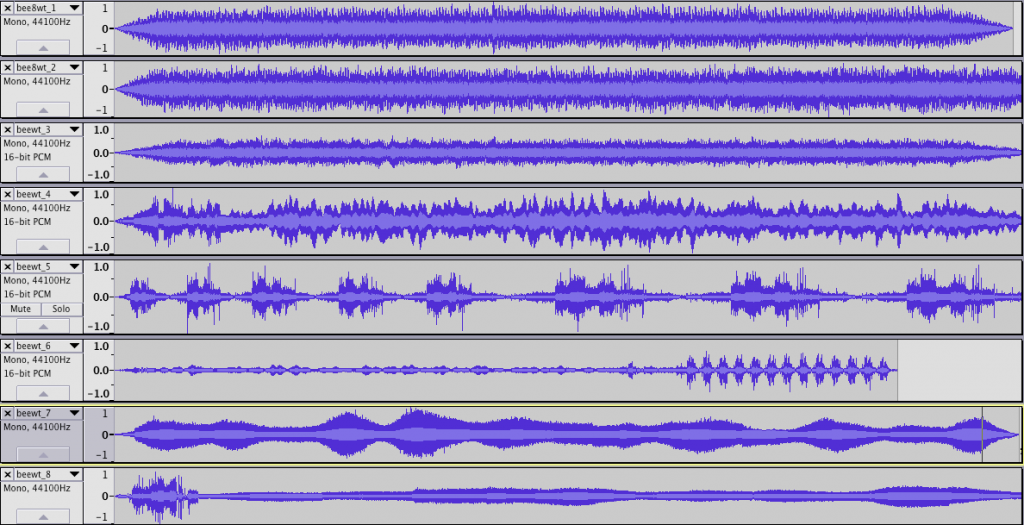

Analysis & synthesis by Givan Bela: Bees along the WatchTower

Beewatch-T8 is a spectral remapping that uses a fast, layered but vectorized kind of sample-and-hold technique based on estimated pitches sliding one into another. It imagines another world: perhaps bees don’t hear themselves buzzing, hissing, or whirring, but rather feel the vibrations in rich sonic patterns all around, telling them …
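To give a rough idea of what a sample-and-hold process on estimated pitches with sliding transitions can look like, here is a small Python sketch. It is only one possible reading of the description and not Givan Bela's actual patch: the function names, the `hold` length and the one-pole `smoothing` factor are hypothetical, and the pitch estimates are assumed to arrive as a plain list of frequencies in Hz.

```python
def sample_and_hold(pitches, hold):
    """Keep every `hold`-th pitch estimate and repeat it for `hold` frames."""
    if hold < 1:
        raise ValueError("hold must be at least 1")
    return [pitches[(i // hold) * hold] for i in range(len(pitches))]


def glide(held, smoothing):
    """Let each held pitch slide into the next with one-pole smoothing.

    `smoothing` lies in [0.0, 1.0): 0.0 jumps immediately to the new
    pitch, values close to 1.0 produce long, slow slides.
    """
    if not 0.0 <= smoothing < 1.0:
        raise ValueError("smoothing must be in [0.0, 1.0)")
    out = []
    current = held[0] if held else 0.0
    for target in held:
        current = smoothing * current + (1.0 - smoothing) * target
        out.append(current)
    return out


# Hypothetical pitch estimates in Hz, one per analysis frame.
estimates = [220.0, 231.0, 247.0, 262.0, 250.0, 240.0]
stepped = sample_and_hold(estimates, hold=2)
sliding = glide(stepped, smoothing=0.5)
```

The stepped sequence freezes the buzz at discrete pitches, and the glide stage turns the jumps between them into continuous slides. Running several such chains with different hold lengths in parallel would be one way to get a layered result.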

Audacity 8-track screenshot